Best CSAT survey questions: Expert examples and implementation tips

CSAT surveys help you understand whether customers are satisfied with your support, and where you can improve the customer experience. Learn about 18 key questions to ask in CSAT surveys, when to ask them, and how to design surveys with high response rates.

Updated February 9, 2026 | 8 min read

Customer satisfaction scores (CSAT) are often one of the first metrics support teams use to track customer experience. CSAT surveys are easy to implement and analyze, but you have to know which questions to ask and when to gather feedback.

In this guide, we'll cover 18 CSAT questions for B2B support teams, when to ask them, and how to factor this data into your customer retention strategy.

What makes an effective CSAT survey question?

CSAT stands for "customer satisfaction score." It's a metric, usually collected through a short survey, that measures how satisfied your customers are with specific interactions, product features, or their support experience. The best CSAT questions focus on one clear moment (like a particular support conversation or feature) instead of asking vaguely about overall satisfaction.

You might remember being stumped by surveys that asked you, "How was everything?" That's a broad question that makes it difficult for customers to provide useful feedback. Instead, effective CSAT questions are targeted and specific.

Here are a few characteristics of effective CSAT questions:

- Clear language: Skip jargon so customers immediately understand what you're asking

- Specific focus: Ask about one interaction or feature at a time

- Actionable answers: Frame questions so the responses tell you exactly what to fix

Once you've figured out what to ask, the timing matters just as much as the question itself. Send your survey within hours of the interaction you're measuring, while the experience is still fresh.

18 CSAT questions to measure customer satisfaction

Here are 18 examples of CSAT questions for B2B support teams, categorized by type and scoring method.

Rating scale questions

These questions use a numbered scale — typically from 1 to 5, or from 1 to 7 — to measure satisfaction. They're quick to answer and easy to track over time.

- How satisfied are you with our product?

- How would you rate the support you received today?

- How satisfied are you with the speed of our response?

- On a scale of 1 to 5, how would you rate your overall experience?

- How satisfied are you with the quality of our product?

- How would you rate the professionalism of our team?

Many teams use 5-point scales because they're simple for customers to answer and easy for teams to track. When you calculate your score, customers who select 4 or 5 count as "satisfied."

Binary satisfaction questions

These questions have yes/no or satisfied/unsatisfied answers. They're even quicker to answer than rating scale questions, so they work best right after support interactions where you want high response rates.

- Did we resolve your issue?

- Did our product meet your expectations?

- Were you satisfied with how we handled your request?

- Was our response helpful?

You lose nuance with binary questions, but you definitely get speed. They're perfect for quick pulse checks after every support ticket.

Multiple choice questions

Multiple choice questions offer predefined answer options that reveal specific aspects of the customer experience. They help you spot patterns across your customer base.

- Which feature do you use most often?

- How often do you use our product? (Daily / Weekly / Monthly / Rarely)

- What was the primary reason for contacting support? (Bug / Question / Feature request / Other)

- Which communication channel do you prefer? (Email / Slack / Chat / SMS)

With multiple choice, offer enough options to cover your customers' most common scenarios while keeping the list short enough to scan quickly.

Open-ended feedback questions

Open-ended questions let customers share detailed thoughts in their own words. They take longer to analyze but provide insights that rating scales miss.

- What could we improve about your experience today?

- What did you like most about working with our team?

- What would make our product more valuable to you?

- Is there anything else you'd like us to know?

Generally, you want to pair open-ended questions with a rating question to create effective feedback loops. Customers who give low scores often provide the most valuable feedback when you ask an open-ended follow-up.

CSAT survey question examples by touchpoint

The same CSAT question might get you different answers depending on when you ask it during the customer journey. Here's how to match questions to the right moments.

Post-support interaction questions

Send these soon after closing a support ticket, ideally within one to two hours:

- How easy was it to resolve your issue today?

- How satisfied are you with the support you received?

- Did our team resolve your problem completely?

- How would you rate the speed of our response?

Post-support questions measure support quality and help you spot team members who consistently deliver great experiences. They also reveal systemic issues in your support process.

Product experience questions

Ask these during regular product usage to understand ongoing satisfaction:

- How satisfied are you with [feature name]?

- How easy is our product to use?

- Does our product help you accomplish your goals?

- How would you rate the reliability of our product?

Space these out to avoid survey fatigue, for example by sending product experience surveys quarterly or after major product milestones. You want to track satisfaction trends without annoying your customers.

Onboarding satisfaction questions

Send these after customers complete key onboarding milestones:

- How satisfied are you with our onboarding process?

- Did our onboarding guides meet your needs during setup?

- How easy was it to get started with our product?

Early satisfaction signals predict long-term retention. Catching friction during onboarding helps you identify at-risk accounts early on.

Feature release questions

Gather targeted feedback when you ship new features:

- How satisfied are you with [new feature name]?

- Does this feature solve the problem you were facing?

- How likely are you to use this feature regularly?

This feedback loop helps product teams validate roadmap decisions and prioritize improvements based on actual customer reactions instead of assumptions.

How to format your CSAT surveys

Beyond choosing which questions to ask, you need to decide how and where to deliver your surveys so customers actually respond. Here's what works best for B2B support teams trying to maximize response rates.

Quick rating questions

Single-click satisfaction ratings take seconds to answer. They work best embedded directly in emails or in-app chat widgets, where customers can respond without opening a new page.

The tradeoff is limited context. You'll know someone is dissatisfied, but you won't know why without follow-up questions.

Contextual follow-up questions

Conditional questions appear based on previous answers. For example, you might only show the question "What went wrong?" after a customer rates their experience poorly. This approach keeps surveys short for satisfied customers while gathering detailed feedback from dissatisfied ones.

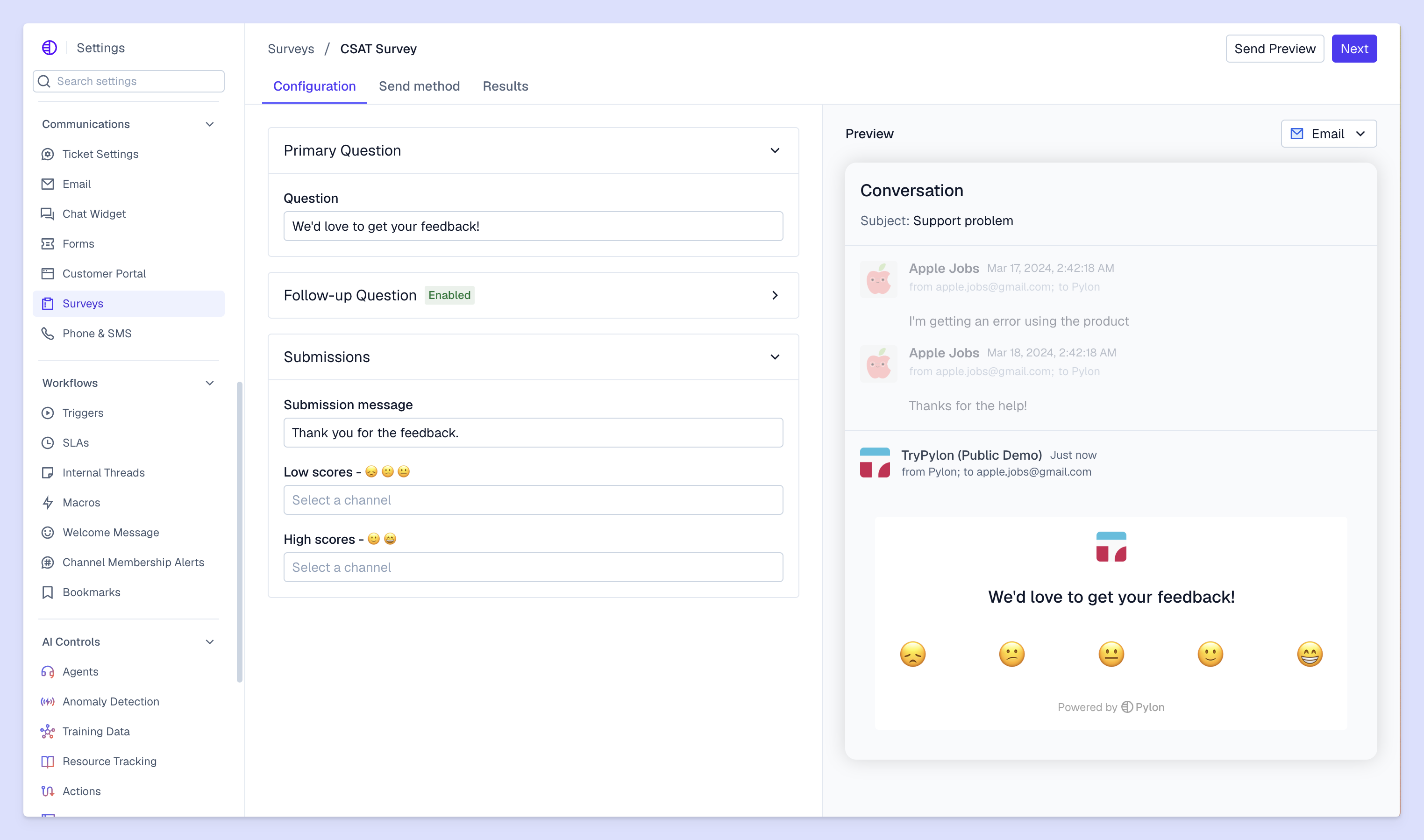

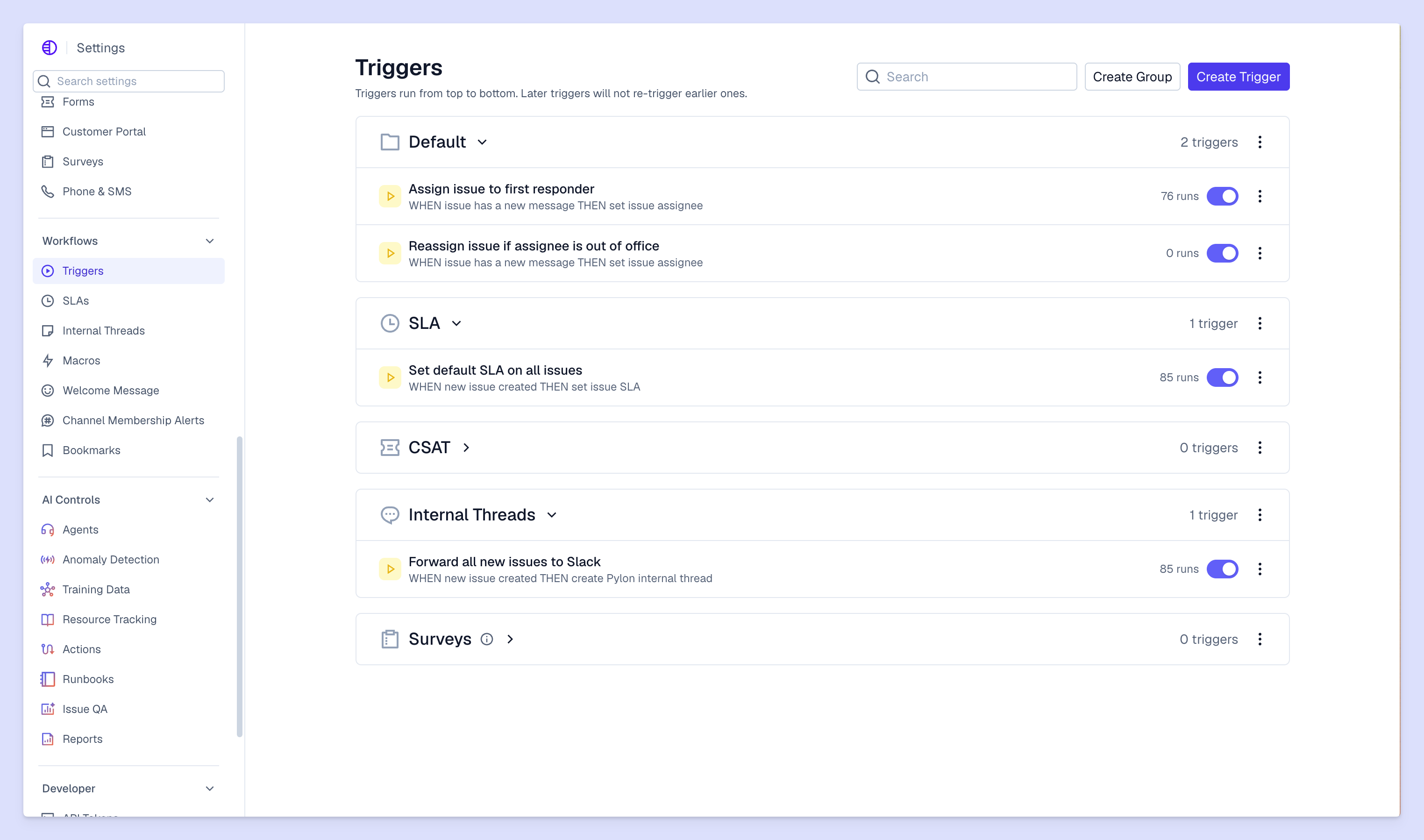

With AI-native support platforms, you can build CSAT surveys that automatically trigger follow-up questions based on certain answers. You collect context without adding extra steps for every customer.
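The branching logic behind a conditional follow-up is simple enough to sketch in a few lines. This is a minimal illustration, not any platform's API: the function name `follow_up_question` is hypothetical, and it assumes a 5-point scale where 4 and 5 count as satisfied, so any rating of 3 or below triggers the follow-up.

```python
from typing import Optional

# Assumption: 5-point scale where 4 and 5 count as "satisfied",
# so a rating of 3 or below is treated as a poor experience.
SATISFIED_MIN = 4


def follow_up_question(rating: int) -> Optional[str]:
    """Return the conditional follow-up for a rating, or None to end the survey."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    if rating < SATISFIED_MIN:
        # Only dissatisfied customers see the extra question.
        return "What went wrong?"
    # Satisfied customers are done after a single click.
    return None


print(follow_up_question(5))  # None
print(follow_up_question(2))  # What went wrong?
```

Keeping the threshold in one constant means the "poor rating" cutoff stays consistent with how you later count satisfied responses.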

Actionable feedback questions

Questions that ask "What would you change?" or "What would improve your experience?" turn satisfaction data into specific next steps. Instead of just knowing a customer is unhappy, you learn exactly what to fix.

Pair actionable questions with your rating questions so you can prioritize improvements based on both frequency and impact on satisfaction scores.

How to calculate your CSAT score

As soon as you've started collecting data, you'll need to understand how CSAT is calculated.

Simply put, your CSAT score is the percentage of satisfied customers out of everyone who responded. The formula is: (number of satisfied customers ÷ total responses) × 100.

You define "satisfied" based on your rating scale. For a 5-point scale, customers who select 4 or 5 count as satisfied. For a 7-point scale, any answer 5 or above typically counts as satisfied.

Here's an example: 80 customers respond to a survey that uses a 5-point scale, and 68 of them select 4 or 5. Your CSAT score is (68 ÷ 80) × 100 = 85%.
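The formula and worked example translate directly into code. This is a minimal sketch, assuming ratings arrive as integers; `csat_score` and `SATISFIED_MIN` are illustrative names, the thresholds follow the rule above (4 or higher on a 5-point scale, 5 or higher on a 7-point scale), and the split of the 80 responses across individual ratings is hypothetical.

```python
# Satisfaction thresholds: 4+ on a 5-point scale, 5+ on a 7-point scale.
SATISFIED_MIN = {5: 4, 7: 5}


def csat_score(ratings: list[int], scale: int = 5) -> float:
    """Return CSAT as a percentage: satisfied responses / total responses * 100."""
    if not ratings:
        raise ValueError("CSAT needs at least one response")
    if any(not 1 <= r <= scale for r in ratings):
        raise ValueError(f"ratings must be between 1 and {scale}")
    threshold = SATISFIED_MIN[scale]
    satisfied = sum(1 for r in ratings if r >= threshold)
    # Multiplying first means only one floating-point division happens.
    return 100 * satisfied / len(ratings)


# Worked example: 80 responses, 68 of which are a 4 or 5.
# The split across individual ratings is hypothetical.
ratings = [5] * 40 + [4] * 28 + [3] * 7 + [2] * 3 + [1] * 2
print(len(ratings), csat_score(ratings))  # 80 85.0
```

Passing `scale=7` applies the 7-point threshold instead, so the same function covers both rating formats.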

Track your score over time and by segment — customer tier, product feature, or support team member — to spot trends and opportunities.
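Segmenting is the same formula applied to groups of responses. This is a minimal sketch, assuming each response is a dict with a `rating` field plus hypothetical segment fields such as `tier` or `agent` (a `month` field would work the same way for trends over time); the sample data is made up for illustration.

```python
from collections import defaultdict


def csat_by_segment(responses: list[dict], key: str, satisfied_min: int = 4) -> dict[str, float]:
    """Compute CSAT (%) separately for each value of `key` (e.g. tier, agent, month)."""
    counts = defaultdict(lambda: [0, 0])  # segment -> [satisfied, total]
    for resp in responses:
        bucket = counts[resp[key]]
        bucket[1] += 1
        if resp["rating"] >= satisfied_min:
            bucket[0] += 1
    return {seg: 100 * sat / total for seg, (sat, total) in counts.items()}


# Hypothetical responses on a 5-point scale.
responses = [
    {"tier": "enterprise", "agent": "ana", "rating": 5},
    {"tier": "enterprise", "agent": "ben", "rating": 2},
    {"tier": "growth", "agent": "ana", "rating": 4},
    {"tier": "growth", "agent": "ana", "rating": 5},
]
print(csat_by_segment(responses, "tier"))   # {'enterprise': 50.0, 'growth': 100.0}
print(csat_by_segment(responses, "agent"))  # {'ana': 100.0, 'ben': 0.0}
```

Small segments produce volatile percentages, so it can help to look at the response count alongside each score.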

CSAT survey best practices for B2B teams

Getting customers to complete surveys and then acting on the feedback requires intentional design. Here's what works for many B2B support teams:

- Keep surveys short: Three to five questions maximum, like one rating question and optional follow-ups

- Send at the right moment: Trigger surveys immediately after key interactions while the experience is fresh

- Make surveys easy to access: Embed rating scales directly in email or support channels instead of requiring clicks to external forms

- Close the loop: Follow up on negative feedback within 24 hours to show customers you're listening

- Segment by account: Track satisfaction across customer tiers to identify patterns in high-value accounts
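One way to make the 24-hour close-the-loop rule operational is to periodically scan for negative responses that nobody has followed up on. This is a minimal sketch: the `Response` record, its fields, and `overdue_follow_ups` are hypothetical, and "negative" is assumed to mean a rating below 4 on a 5-point scale.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

FOLLOW_UP_WINDOW = timedelta(hours=24)  # follow up on negative feedback within 24 hours
SATISFIED_MIN = 4  # assumption: 5-point scale, 4 and 5 count as satisfied


@dataclass
class Response:  # hypothetical record; real field names depend on your tooling
    account: str
    rating: int
    submitted_at: datetime
    followed_up_at: Optional[datetime] = None


def overdue_follow_ups(responses: list[Response], now: datetime) -> list[Response]:
    """Negative responses with no follow-up whose 24-hour window has already passed."""
    return [
        r for r in responses
        if r.rating < SATISFIED_MIN
        and r.followed_up_at is None
        and now - r.submitted_at > FOLLOW_UP_WINDOW
    ]


now = datetime(2026, 2, 9, 12, 0)
responses = [
    Response("acme", 2, now - timedelta(hours=30)),     # overdue
    Response("globex", 1, now - timedelta(hours=5)),    # still inside the window
    Response("initech", 5, now - timedelta(hours=48)),  # satisfied, no follow-up needed
    Response("umbrella", 3, now - timedelta(hours=40),
             followed_up_at=now - timedelta(hours=30)),  # already handled
]
print([r.account for r in overdue_follow_ups(responses, now)])  # ['acme']
```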

With modern B2B support platforms like Pylon, you can automatically trigger CSAT surveys after key support interactions across Slack, email, in-app chat, or even embedded in your knowledge base. Then, track and report on those scores using Pylon's analytics dashboards.

Common CSAT mistakes that negatively impact response rates

Even well-intentioned surveys get ignored when they ask too much or arrive at the wrong time. Here are common mistakes that can hurt your response rates:

- Asking too many questions: Surveys longer than five questions often see noticeably lower completion rates

- Using confusing language: Technical jargon or compound questions make customers abandon surveys mid-completion

- Poor timing: If you send surveys days after an interaction, they often get ignored because customers have moved on

- No follow-up: Customers stop responding if you never acknowledge their feedback or explain what changed

- Survey fatigue: Sending surveys after every minor interaction trains customers to ignore all your requests

The takeaways: Send surveys at key moments, keep the question count low, and always close the loop when customers take the time to respond.

Transform CSAT data into customer success strategy

CSAT scores are most valuable when you use them to guide your support and retention efforts. Here's how to turn satisfaction feedback into action:

- Identify at-risk accounts: Customers with consistently low CSAT scores often churn within 90 days, especially if satisfaction drops suddenly

- Prioritize product improvements: Tie feature requests to product satisfaction feedback, so you can focus on building features that improve satisfaction

- Personalize support approaches: Use satisfaction history to adjust how your team communicates with specific accounts

- Measure team performance: Track CSAT by team member to identify coaching opportunities and celebrate great work

The most effective B2B teams unify CSAT data with other customer signals — like product usage, support ticket volume, and feature requests — to build complete account-level intelligence. When satisfaction drops at a high-value account, you want to know immediately and understand the full context.
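As an illustration of combining these signals, the sketch below flags an account when CSAT is consistently low or drops suddenly, then adds product usage and ticket volume as corroborating evidence. Everything here is an assumption for illustration: the `AccountSignals` record, the `risk_reasons` function, and all thresholds are hypothetical and would need tuning against your own churn history.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; tune them against your own churn history.
LOW_CSAT = 70.0     # CSAT % treated as "low"
SUDDEN_DROP = 15.0  # percentage-point fall between the last two periods
USAGE_DROP = -0.2   # usage down 20% or more
TICKET_SPIKE = 0.5  # ticket volume up 50% or more


@dataclass
class AccountSignals:
    name: str
    csat_history: list[float]  # CSAT % per period, oldest first
    usage_change: float        # fractional change in product usage, e.g. -0.3 = 30% drop
    ticket_change: float       # fractional change in support ticket volume


def risk_reasons(acct: AccountSignals) -> list[str]:
    """Return why an account looks at risk; an empty list means no CSAT warning."""
    reasons = []
    history = acct.csat_history
    if len(history) >= 3 and all(score < LOW_CSAT for score in history[-3:]):
        reasons.append("consistently low CSAT")
    if len(history) >= 2 and history[-2] - history[-1] >= SUDDEN_DROP:
        reasons.append("sudden CSAT drop")
    if reasons:
        # Usage and ticket trends corroborate a CSAT warning rather than trigger one.
        if acct.usage_change <= USAGE_DROP:
            reasons.append("product usage down")
        if acct.ticket_change >= TICKET_SPIKE:
            reasons.append("ticket volume up")
    return reasons


accounts = [
    AccountSignals("acme", [88.0, 85.0, 65.0], usage_change=-0.3, ticket_change=0.6),
    AccountSignals("globex", [60.0, 62.0, 58.0], usage_change=0.1, ticket_change=0.0),
    AccountSignals("initech", [90.0, 92.0, 91.0], usage_change=-0.5, ticket_change=1.0),
]
for acct in accounts:
    print(acct.name, risk_reasons(acct))
```

In this design, CSAT is the primary trigger and the other signals only strengthen an existing warning. A team could just as reasonably let a sharp usage drop raise a flag on its own.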

Pylon is the modern B2B support platform that offers true omnichannel support across Slack, Teams, email, chat, ticket forms, and more. Our AI Agents & Assistants automate busywork and reduce response times. Plus, with Account Intelligence that unifies scattered customer signals to calculate health scores and identify churn risk, we're built for customer success at scale.

FAQs

How often should you send CSAT surveys to B2B customers?

Send CSAT surveys after significant interactions like support ticket resolutions or onboarding milestones. As a general rule, survey the same customer no more than once per month to prevent survey fatigue.
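The timing and frequency guidance can be combined into a single send check. This is a minimal sketch, assuming a two-hour send window after a ticket closes and approximating "once per month" as 30 days; `should_send_survey` and both constants are hypothetical names for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional

SEND_WINDOW = timedelta(hours=2)  # survey within about two hours of ticket close
MIN_GAP = timedelta(days=30)      # "no more than once per month", approximated as 30 days


def should_send_survey(ticket_closed_at: datetime, now: datetime,
                       last_surveyed_at: Optional[datetime]) -> bool:
    """Decide whether a just-closed ticket should trigger a CSAT survey."""
    if not timedelta(0) <= now - ticket_closed_at <= SEND_WINDOW:
        return False  # experience is no longer fresh (or close time is in the future)
    if last_surveyed_at is not None and now - last_surveyed_at < MIN_GAP:
        return False  # customer was surveyed recently; skip to limit fatigue
    return True


now = datetime(2026, 2, 9, 12, 0)
print(should_send_survey(now - timedelta(minutes=30), now, None))                     # True
print(should_send_survey(now - timedelta(minutes=30), now, now - timedelta(days=10)))  # False
```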

What is the ideal length for a CSAT survey?

Keep CSAT surveys to three to five questions maximum. Include one primary satisfaction rating question, plus two or three optional follow-ups for context.

How do CSAT scores predict customer churn risk?

Consistently low CSAT scores from an account indicate dissatisfaction that often results in churn. The signal becomes stronger when combined with decreased product usage or increased support ticket volume.

What is the difference between CSAT, NPS, and CES?

CSAT measures satisfaction with specific interactions, product features, or service. NPS (net promoter score) measures a customer's likelihood to recommend your company. CES (customer effort score) measures how easy it was for the customer to complete a task.

What is a good CSAT score for B2B companies?

B2B companies typically aim for CSAT scores above 80%. Benchmarks vary by industry and the specific touchpoint you're measuring.